From conveying precise instructions to evoking entirely new worlds, words and their meanings are central to our existence as humans. But how the multitude of cells that make up a human brain take abstract noises or symbols and convert them into something meaningful has long been a mystery.

New techniques that can track brain activity down to a single neuron are now revealing where this translation from sound to meaning takes place in the brain.

“Humans possess an exceptional ability to extract nuanced meaning through language – when we listen to speech, we can comprehend the meanings of up to tens of thousands of words and do so seamlessly across remarkably diverse concepts and themes,” says Harvard University neuroscientist Ziv Williams.

“We… wanted to find how humans are able to process such diverse meanings during natural speech and through which we are able to rapidly comprehend the meanings of words across a wide array of sentences, stories, and narratives.”

Harvard University neuroscientists Mohsen Jamali and Benjamin Grannan and their colleagues used tungsten ‘lab-on-a-chip’ microelectrode arrays and Neuropixels probes to record the activity of individual cells in the prefrontal cortex of 13 participants while they listened to individual sentences and stories.

Recording from surprisingly few neurons in this part of the brain, a region involved in speech formation and working memory, was enough for the researchers to loosely ‘mind read’ general meanings from the patterns of cellular activity.

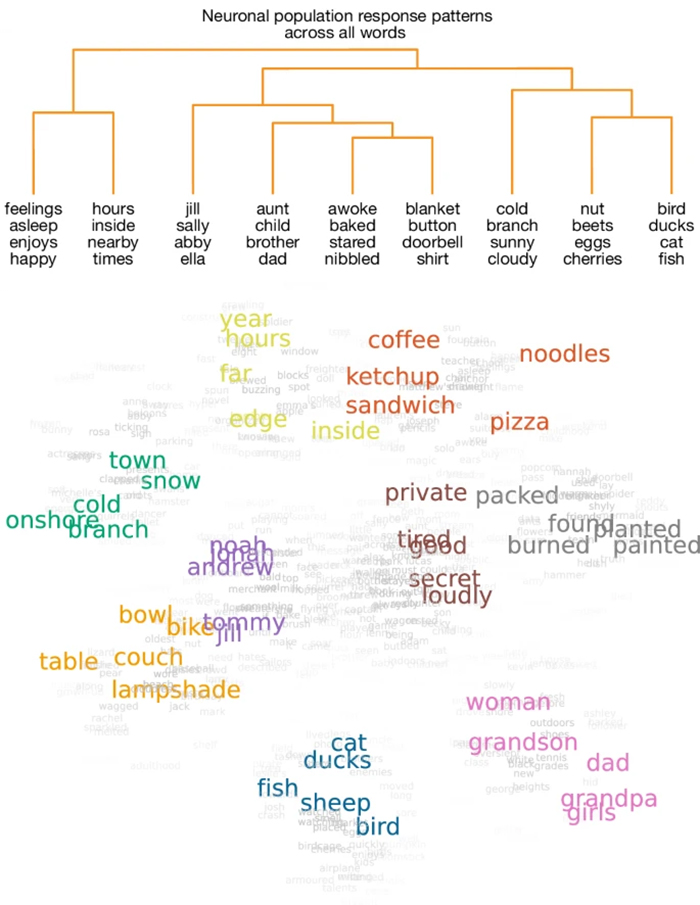

The recordings revealed that words with similar meanings, like ‘noodles’ and ‘pizza’, create similar patterns of activity in participants’ brains, and that these patterns differ substantially for words with disparate meanings, such as ‘duck’ and ‘coffee’.

“We found that while certain neurons preferentially activated when people heard words such as ran or jumped, which reflect actions, other neurons preferentially activated when hearing words that have emotional connotations, such as happy or sad,” explains Williams.

“When looking at all of the neurons together, we could start building a detailed picture of how word meanings are represented in the brain.”

What’s more, the pattern of neuronal activity evoked by a word also depends on the words that come before and after it.

“Rather than simply responding to words as fixed stored memory representations, these neurons seemed to adaptively represent word meanings in a context-dependent manner during natural speech processing,” the team writes in their paper.

This context sensitivity is what lets us distinguish between homophones, words that sound the same but have different meanings, like ‘I’ and ‘eye’.

The researchers also found hints of a hierarchical organization of meaning that could allow us to rapidly sift through words with similar meanings as we hear them.

“By being able to decode word meaning from the activities of small numbers of brain cells, it may be possible to predict, with a certain degree of granularity, what someone is listening to or thinking,” says Williams.

“It could also potentially allow us to develop brain-machine interfaces in the future that can enable individuals with conditions such as motor paralysis or stroke to communicate more effectively.”

The researchers are now keen to explore whether the meaning maps they’ve discovered in our brains remain similar in response to written words, pictures, or even different languages, as well as which other parts of the brain may be involved.

This research was published in Nature.