
We’re seeing major advancements in tech that can decode brain signals, interpreting neural activity to reveal what’s on someone’s mind, what they want to say, or – in the case of a new study – which song they’re listening to.

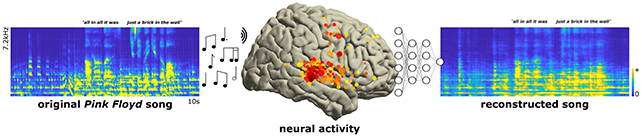

US researchers have reconstructed a “recognizable version” of a Pink Floyd song from the pulses of activity moving through a specific part of the brain’s temporal lobe, recorded as volunteers listened to the hit Another Brick in the Wall, Part 1.

The tune was first converted into a spectrogram, a picture of how the energy at each sound frequency changes over time, to bring it closer to the way the brain processes audio. Even with that initial processing, the reverse step, going from brain activity back to sound, is impressive in terms of its fidelity.
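For readers curious what a spectrogram looks like in practice, here is a minimal Python sketch using NumPy. It applies a plain short-time Fourier transform to a synthetic 440 Hz tone. This is a stand-in chosen for illustration: the function name, frame sizes, and test tone are all assumptions, and it is not necessarily the spectrogram method the researchers used.

```python
import numpy as np

def magnitude_spectrogram(signal, frame_len=256, hop=128):
    """Short-time Fourier magnitudes: rows are time frames, columns are frequency bins.

    frame_len and hop are illustrative values, not taken from the study.
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Cut the signal into overlapping, windowed frames.
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # Real-input FFT of each frame gives the energy per frequency bin.
    return np.abs(np.fft.rfft(frames, axis=1))

# Synthetic one-second test tone (an assumption, standing in for real audio).
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)

spec = magnitude_spectrogram(tone)

# The strongest frequency bin should sit close to the tone's 440 Hz.
peak_bin = int(spec.mean(axis=0).argmax())
peak_hz = peak_bin * sample_rate / 256
```

Each row of `spec` is a snapshot in time and each column a frequency band, which is the kind of grid a decoding model can then try to predict.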

“We reconstructed the classic Pink Floyd song Another Brick in the Wall from direct human cortical recordings, providing insights into the neural bases of music perception and into future brain decoding applications,” says neuroscientist Ludovic Bellier from the University of California, Berkeley.

Bellier and colleagues set out to see how brain patterns might map to musical elements like pitch and harmony, and ended up discovering that a part of the brain’s auditory cortex called the superior temporal gyrus (STG) is linked to rhythm. This area in particular seems to be important for perceiving and understanding music.

To gather the necessary brain activity data, the team recruited 29 people who already had brain electrodes implanted to help manage their epilepsy. Across all the participants, a total of 2,668 electrodes were monitored for neural patterns while they were listening to Pink Floyd.

All of this data was then analyzed via machine learning, through what’s known as a regression-based decoding model. In simple terms, computer algorithms looked for correlations between the music being played and what was going on in the brain.
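To make the idea of a regression-based decoding model more concrete, here is a toy Python sketch using NumPy. It fits a ridge regression that maps simulated electrode activity to simulated spectrogram values, then checks how well the predictions correlate with held-out data. Everything here is an assumption for illustration: the data are random numbers, the sizes are arbitrary, and ridge regression is simply one common regression technique, not a reproduction of the study's pipeline.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes only; the study recorded from 2,668 electrodes.
n_samples, n_electrodes, n_freq_bins = 500, 20, 8

# Synthetic stand-ins: fake neural activity and a fake spectrogram that
# depends linearly on it, plus a little noise.
true_weights = rng.normal(size=(n_electrodes, n_freq_bins))
neural = rng.normal(size=(n_samples, n_electrodes))
spectrogram = neural @ true_weights + 0.1 * rng.normal(size=(n_samples, n_freq_bins))

# Hold out the last 100 time points to test the decoder on unseen data.
train, test = slice(0, 400), slice(400, 500)

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: solve (X^T X + alpha I) W = X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def columnwise_correlation(a, b):
    """Pearson correlation between matching columns of a and b."""
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    return (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))

# Learn the mapping from brain activity to sound, then "decode" unseen activity.
weights = fit_ridge(neural[train], spectrogram[train])
predicted = neural[test] @ weights

# One score per frequency bin: how closely the decoded values track the real ones.
scores = columnwise_correlation(predicted, spectrogram[test])
```

With real recordings the relationship is far noisier than in this toy setup, so the correlation scores would be much lower than the near-perfect values synthetic data produce.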

Through that learning process, the researchers could then reverse the system and identify Another Brick in the Wall through how the brain was responding to it. The reconstructed track is a little muddy and distorted, but it’s not hard to tell what the song is.

This contributes to ongoing efforts to better decode brain patterns and improve brain-machine interfaces.

Imagine being able to restore music perception for people with brain damage, for example, or enabling those who have lost the power of speech to think the words they want to say and have them come through with their pitch, tone, and lyrical flow intact.

“For example, the musical perception findings could contribute to development of a general auditory decoder that includes the prosodic elements of speech based on relatively few, well-located electrodes,” write the researchers in their published paper.

The research has been published in PLOS Biology.