Researchers have demonstrated how to keep a network of nanowires in a state that’s right on what’s known as the edge of chaos – an achievement that could be used to produce artificial intelligence (AI) that acts much like the human brain does.

The team applied varying levels of electricity to a simulated nanowire network, looking for the balance point between a signal that was too low and one that was too high. If the signal was too low, the network’s outputs weren’t complex enough to be useful; if the signal was too high, the outputs were a mess and also useless.

“We found that if you push the signal too slowly the network just does the same thing over and over without learning and developing. If we pushed it too hard and fast, the network becomes erratic and unpredictable,” says physicist Joel Hochstetter from the University of Sydney and the study’s lead author.

Keeping the simulations on the line between those two extremes produced the optimal results from the network, the scientists report. The findings suggest a variety of brain-like dynamics could eventually be produced using nanowire networks.

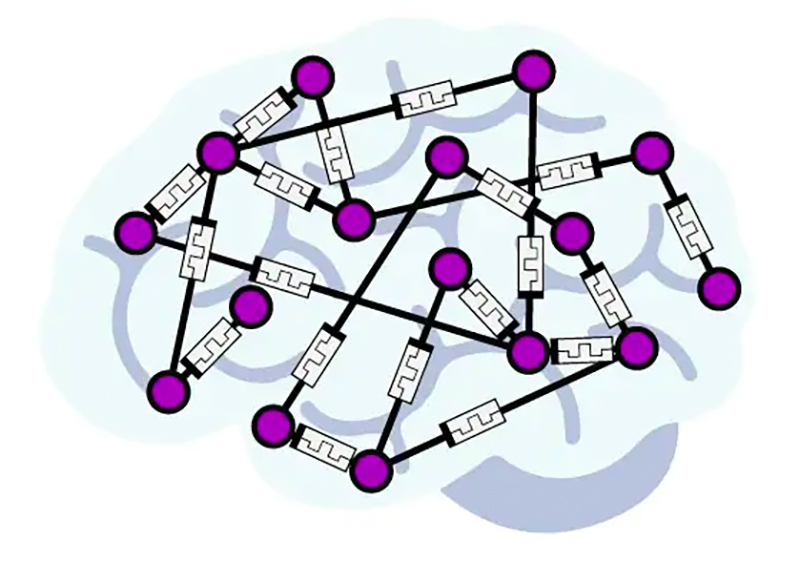

Conceptual image of randomly connected switches. (Alon Loeffler)

“Some theories in neuroscience suggest the human mind could operate at this edge of chaos, or what is called the critical state,” says physicist Zdenka Kuncic from the University of Sydney in Australia. “Some neuroscientists think it is in this state where we achieve maximal brain performance.”

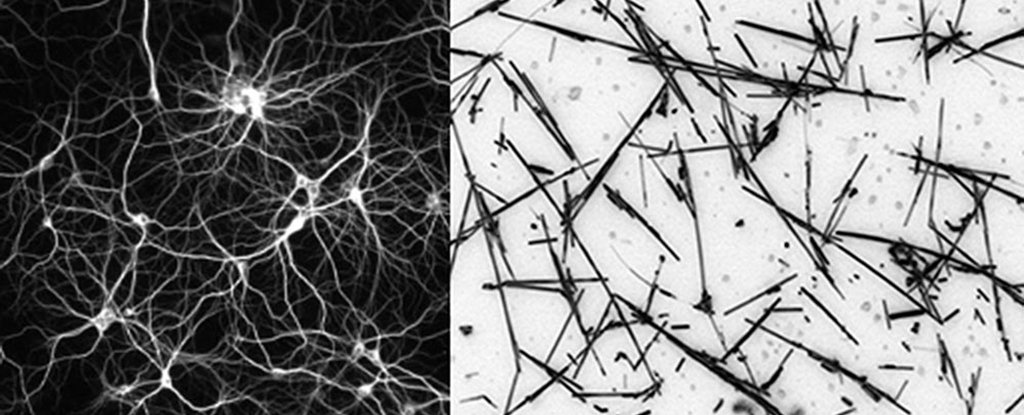

For the simulations, nanowires 10 micrometers long and no thicker than 500 nanometers were arranged randomly on a two-dimensional plane. Human hairs can be up to around 100,000 nanometers wide, for comparison.
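To make that setup concrete, here is a minimal Python sketch of scattering straight wires at random on a flat plane and listing which pairs cross. It is an illustration only, not the researchers' simulation code: the 10-micrometer wire length comes from the study as described above, while the plane size, the wire count, and the function names `random_wires`, `segments_cross` and `find_junctions` are assumptions made for this example.

```python
import math
import random


def random_wires(n, plane_um=50.0, length_um=10.0, seed=0):
    """Place n straight wires with random centres and orientations.

    plane_um and n are illustrative assumptions; only length_um (10 um)
    is taken from the article.
    """
    rng = random.Random(seed)
    wires = []
    for _ in range(n):
        cx = rng.uniform(0.0, plane_um)
        cy = rng.uniform(0.0, plane_um)
        theta = rng.uniform(0.0, math.pi)
        dx = 0.5 * length_um * math.cos(theta)
        dy = 0.5 * length_um * math.sin(theta)
        wires.append(((cx - dx, cy - dy), (cx + dx, cy + dy)))
    return wires


def _orient(p, q, r):
    """Signed area test: >0 if r is left of p->q, <0 if right, 0 if collinear."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def segments_cross(a, b):
    """True if segments a and b properly cross (touching endpoints are ignored)."""
    p1, p2 = a
    p3, p4 = b
    d1 = _orient(p3, p4, p1)
    d2 = _orient(p3, p4, p2)
    d3 = _orient(p1, p2, p3)
    d4 = _orient(p1, p2, p4)
    return d1 * d2 < 0 and d3 * d4 < 0


def find_junctions(wires):
    """Return index pairs (i, j), i < j, of wires that overlap."""
    return [
        (i, j)
        for i in range(len(wires))
        for j in range(i + 1, len(wires))
        if segments_cross(wires[i], wires[j])
    ]


if __name__ == "__main__":
    wires = random_wires(100)
    junctions = find_junctions(wires)
    print(f"{len(wires)} wires, {len(junctions)} crossing points")
```

Each crossing pair found this way stands in for one junction in the network. The all-pairs check is quadratic in the number of wires, which is fine for a small sketch but would need a spatial index for large networks.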

In this case, the problem the network was tasked with was transforming a simple waveform into a more complex type, with the amplitude and frequency of the electrical signal adjusted to find the optimal state for solving the problem – right on the edge of chaos.

Nanowire networks combine two systems into one, managing both memory (the equivalent of computer RAM) and operations (the equivalent of a computer CPU). They can remember a history of previous signals, changing their future output in response to what’s happened before, which makes them behave like memristors.
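The idea of an output that depends on signal history can be shown with a toy Python model of a single memory-bearing junction. This is a deliberately simplified sketch, not the junction model used in the study: the function name `simulate_junction`, the update rule, and every parameter value (`rate`, `decay`, `g_off`, `g_on`, `dt`) are assumptions chosen only to show the behaviour.

```python
def simulate_junction(voltages, dt=1e-3, g_off=1e-3, g_on=1.0,
                      rate=50.0, decay=5.0):
    """Toy memristive junction (all parameters are illustrative assumptions).

    An internal state w in [0, 1] grows while voltage is applied and
    relaxes back towards 0 otherwise. Conductance is interpolated between
    g_off (w = 0) and g_on (w = 1), so the current for a given voltage
    depends on what the junction has seen before.
    """
    w = 0.0
    currents = []
    for v in voltages:
        conductance = g_off + (g_on - g_off) * w
        currents.append(conductance * v)
        # Update the memory state after reading out the current.
        w += dt * (rate * abs(v) * (1.0 - w) - decay * w)
        w = min(1.0, max(0.0, w))
    return currents


if __name__ == "__main__":
    fresh = simulate_junction([0.5])[-1]
    primed = simulate_junction([1.0] * 200 + [0.5])[-1]
    print(f"fresh junction:  {fresh:.4f}")
    print(f"primed junction: {primed:.4f}")
```

The same 0.5-volt probe produces a larger current in the junction that was stimulated beforehand than in the fresh one, which is the sense in which such an element "remembers" earlier signals.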

“Where the wires overlap, they form an electrochemical junction, like the synapses between neurons,” says Hochstetter.

Typically, algorithms train the network on where the best pathways are, but in this instance, the network did it on its own.

“We found that electrical signals put through this network automatically find the best route for transmitting information,” says Hochstetter. “And this architecture allows the network to ‘remember’ previous pathways through the system.”

That in turn could mean significantly reduced energy usage, because the networks end up training themselves using the most efficient processes. As artificial intelligence networks scale up, being able to keep them lean and as low-powered as possible will be important.

For now, the scientists have shown that nanowire networks can do their best problem solving right on the line between order and chaos, much as our brains are thought to, and that puts us a step closer to AI that thinks as we do.

“What’s so exciting about this result is that it suggests that these types of nanowire networks can be tuned into regimes with diverse, brain-like collective dynamics, which can be leveraged to optimise information processing,” says Kuncic.

The research has been published in Nature Communications.