
Facebook is testing a new conflict alerts feature that will inform admins when war erupts in the comments section, hoping to keep unhealthy conversations in check and make moderation easier. Facebook has been busy lately when it comes to combating toxicity and the spread of disinformation on its platform, encompassing everything from deepfakes to wild conspiracy theories.

In March, Facebook announced stricter rules governing conversations on its platform, which is used by roughly three billion people each month. Under the updated guidelines, users will be warned before joining a group that has been reported for violating community standards, and content from such groups will not be promoted in the News Feed. A group that commits repeat violations risks being removed. As for users who break the safe conversation rules, Facebook can restrict their ability to post, comment, invite other people to groups, or create a new group, depending on the severity of the violation.

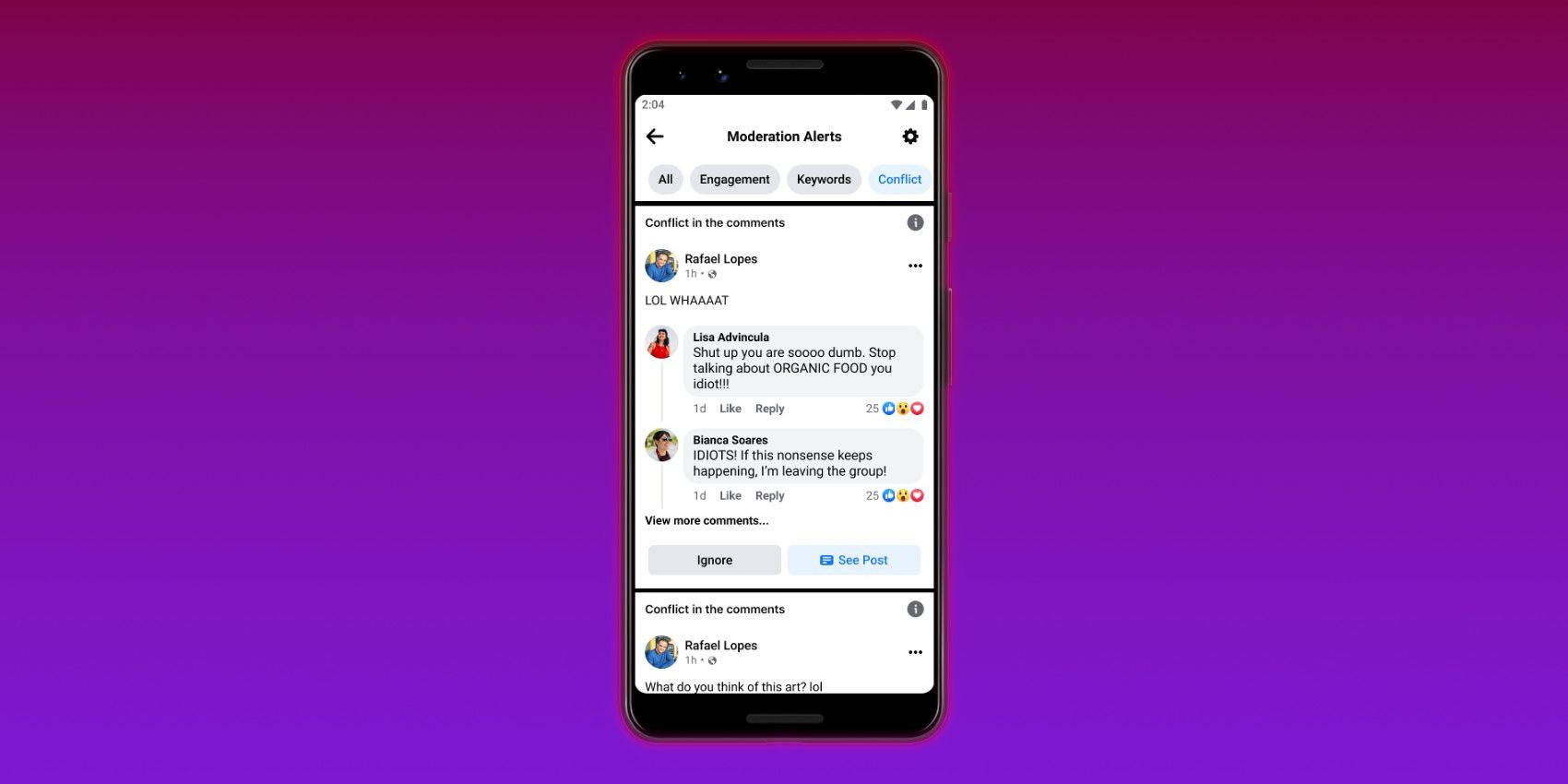

Now, Facebook plans to use AI to detect when conversations in the comments section get heated and might require intervention from the group admin or moderator to cool things down. The social media juggernaut is currently testing the feature, called conflict alerts, which notifies admins when “contentious or unhealthy conversations” happen in a group. However, the company has not revealed technical details about how the automated conflict detection works.

Facebook Seeks To Make Moderation Less Daunting

Facebook already has a policy in place that downranks groups breaking its community guidelines, and it has created a tiered system that reduces members’ activity privileges when they violate policies. To make group moderation more efficient, the company is also announcing a new dashboard called Admin Home that puts all controls in one place. Another automated feature, called ‘pro-tips’, is also making its way to the platform. As the name indicates, it will offer suggestions to help admins manage group interactions effectively.

More importantly, Facebook is adding an extra dash of automation to the moderation process. The new comment moderation tools in Admin Assist will allow an admin to create criteria governing posts, comments, and member participation. For example, admins can now set rules that automatically block comments from users with a policy violation record, and they can enforce ground rules that reduce promotional and spammy content in a group. There will also be a new option that lets an admin specify how often group members can comment on a given post. And to help group admins weed out miscreants, Facebook is also rolling out a member summary feature that will allow an admin to vet a member’s social media activity and decide whether that member is fit to be included in a group. This member summary will include a record of the member’s posts and comments, along with any history of policy violations.

But an AI-driven system is prone to faults, which is why Facebook will allow group admins to submit an appeal to have incidents of policy violations by a member reviewed. Furthermore, to ensure that the rules around conversations in a group are easily accessible to all members, admins will now be able to post these rules as a comment or as a separate post. For added convenience, Facebook will also allow admins to pin a comment with the requisite information to the top of a group post. The latest steps being taken by Facebook are reassuring, especially at a time when issues such as COVID-19 vaccine misinformation have become a new battlefront for social media platforms.

Source: Facebook
