
Facebook may be reconfiguring its News Feed algorithms. After being grilled by lawmakers about the role that Facebook played in the attack on the U.S. Capitol, the company announced this morning it will be rolling out a series of News Feed ranking tests that will ask users to provide feedback about the posts they’re seeing, which will later be incorporated into Facebook’s News Feed ranking process. Specifically, Facebook will be looking to learn which content people find inspirational, what content they want to see less of (like politics), and what other topics they’re generally interested in, among other things.

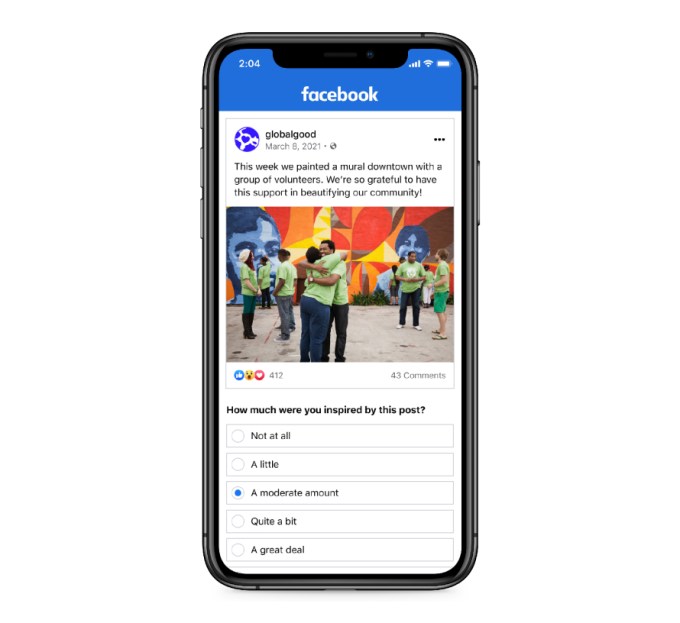

This will be done through a series of global tests, one of which will involve a survey directly beneath the post itself that asks, “how much were you inspired by this post?,” with the goal of helping to show more people posts of an inspirational nature closer to the top of the News Feed.

Image Credits: Facebook

Another test will work to tailor the Facebook News Feed experience to reflect what people want to see. Today, Facebook prioritizes showing you content from friends, Groups and Pages you’ve chosen to follow, but it has algorithmically crafted an experience of whose posts to show you and when based on a variety of signals. This includes both implicit and explicit signals, like how much you engage with that person’s content (or Page or Group) on a regular basis, as well as whether you’ve added them as a “Close Friend” or “Favorite,” indicating you want to see more of their content than others, for example.

However, just because you’re close to someone in real life, that doesn’t mean that you like what they post to Facebook. This has driven families and friends apart in recent years, as people discovered by way of social media how people they thought they knew really viewed the world. It’s been a painful reckoning for some. Facebook hasn’t managed to fix the problem, either. Today, users still scroll News Feeds that reinforce their views, no matter how problematic. And with the growing tide of misinformation, the News Feed has gone from just placing users into a filter bubble to presenting a full alternate reality for some, often populated by conspiracy theories.

Facebook’s third test doesn’t necessarily tackle this problem head-on, but instead looks to gain feedback about what users want to see, as a whole. Facebook says that it will begin asking people whether they want to see more or fewer posts on certain topics, such as Cooking, Sports, or Politics. Based on users’ collective feedback, Facebook will adjust its algorithms to show more content people say they’re interested in, and fewer posts about topics they don’t want to see.

The area of politics, specifically, has been an issue for Facebook. The social network for years has been charged with helping to fan the flames of political discourse, polarizing and radicalizing users through its algorithms, distributing misinformation at scale, and encouraging an ecosystem of divisive clickbait, as publishers sought engagement instead of fairness and balance when reporting the news. There are now entirely biased and subjective outlets posing as news sources that benefit from algorithms like Facebook’s, in fact.

Shortly after the Capitol attack, Facebook announced it would try clamping down on political content in the News Feed for a small percentage of people in the U.S., Canada, Brazil and Indonesia, for a period of time during tests.

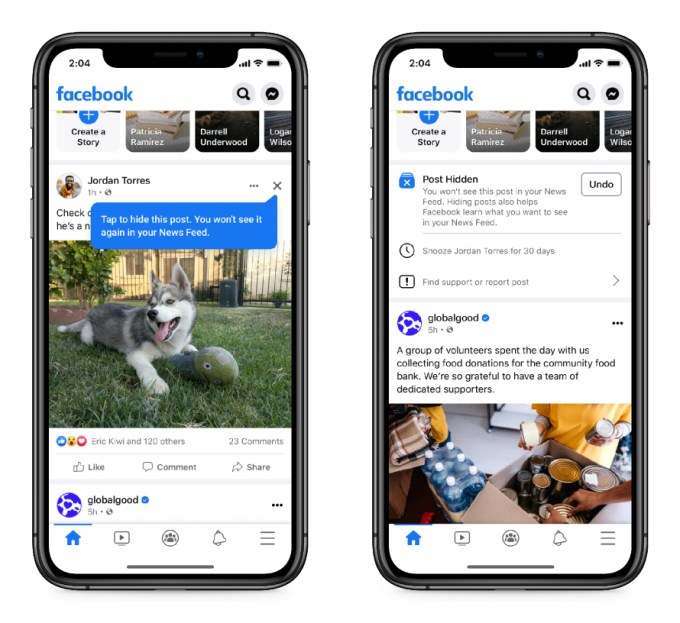

Now, the company says it will work to better understand what content is being linked to negative News Feed experiences, including political content. In this case, Facebook may ask users on posts with a lot of negative reactions what sort of content they want to see less of.

It will also more prominently feature the option to hide posts you find “irrelevant, problematic or irritating.” Although this feature existed before, if you’re in the test group you’ll now be able to tap an X in the upper-right corner of a post to hide it from the News Feed and see fewer posts like it in the future, for a more personalized experience.

It’s not clear that allowing users to pick and choose their topics is the best way to solve the larger problems with negative posts, divisive content or misinformation, though this test is less about the latter and more about making the News Feed “feel” more positive.

As the data is collected from the tests, Facebook will incorporate the learnings into its News Feed ranking algorithms. But it’s not clear to what extent it will be adjusting the algorithm on a global basis versus simply customizing the experience for end users on a more individual basis over time.

The company says the tests will run over the next few months.